I created an interactive display of human anatomy in 3D, in which the section views of organs are revealed according to their physical depth.

This project investigates new ways to interact with virtual information in physical space. It integrates and overlays the digital “interface” on top of tangible experiences and spatial deformations.

My Role

Concept Development, Design, Coding, Fabrication

Tools

Arduino, Processing, Adobe Creative Cloud, Maya

Team

Myself

Duration

Nov 2016 - Jan 2017

Design Outcome

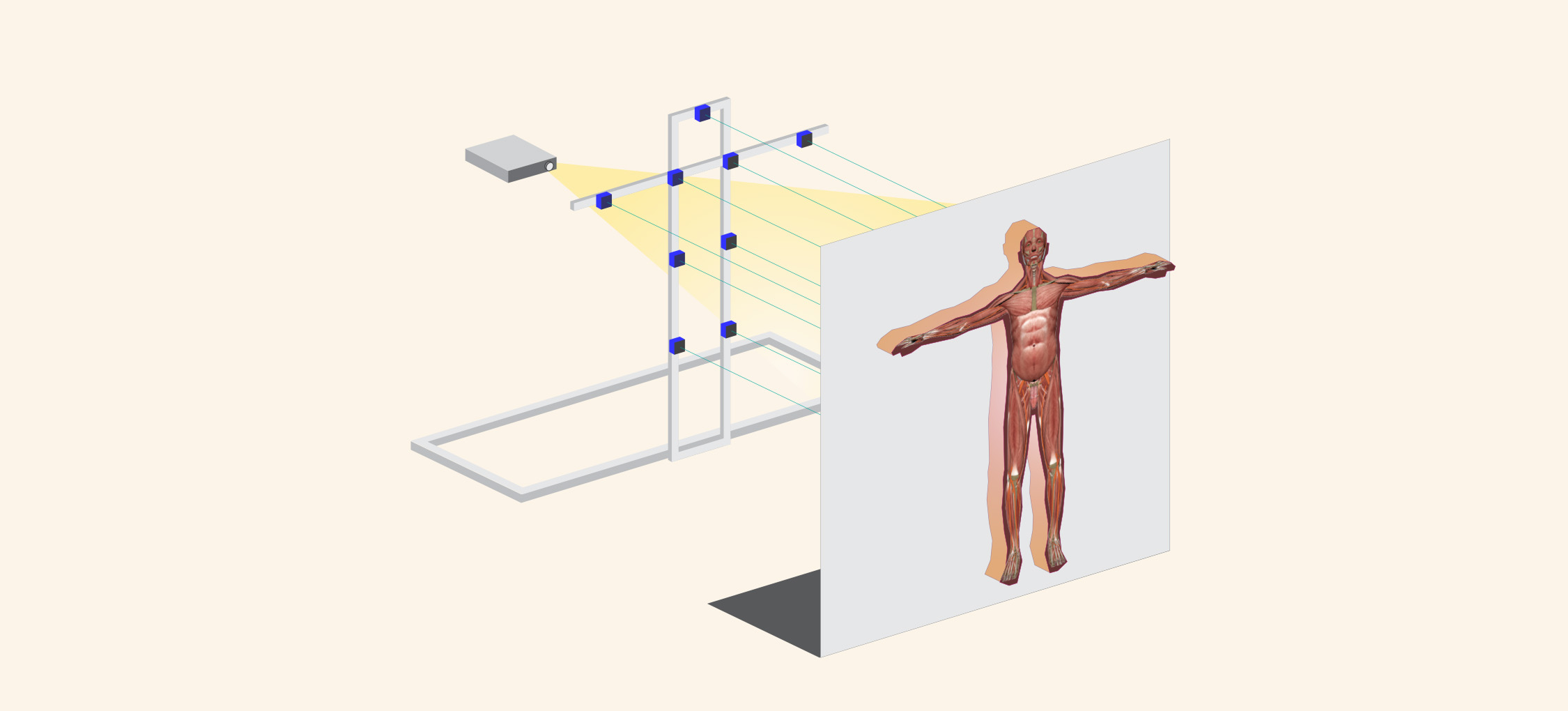

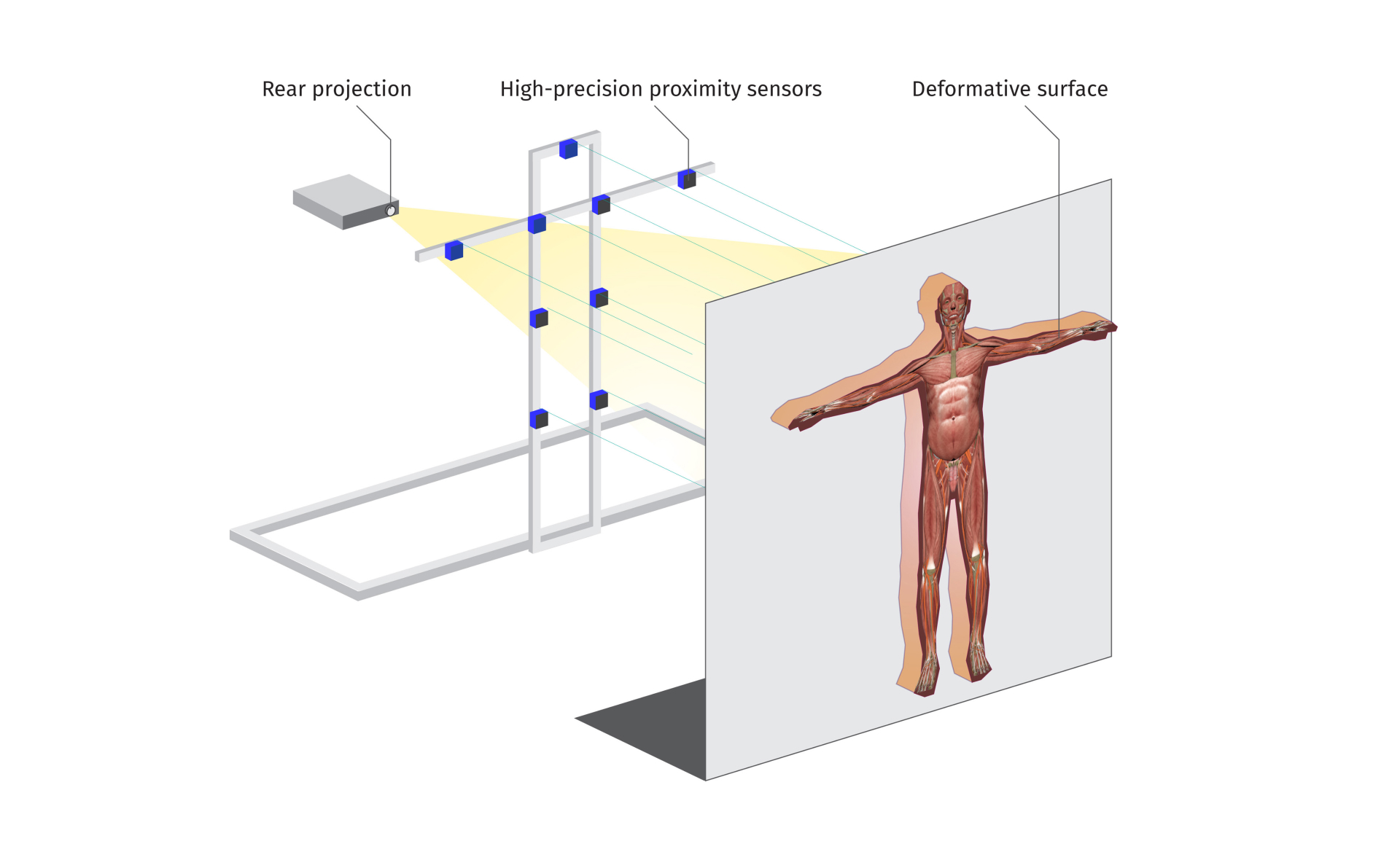

Atomu is an augmented reality installation that lets people dissect and view human organs in 3D by touching a physical interface. The installation features a life-size human figure covered by deformable fabric, with the visual content projected onto the fabric from behind.

Behind the elastic fabric, on which the interactive graphics are projected, 12 proximity sensors track users' hand movements. As a user presses into the fabric, the press depth is measured and sent to Processing, which selects the section view of the human body at the corresponding depth.
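The depth-to-layer selection can be sketched roughly as follows. This is an illustrative Python sketch, not the Processing code used in the installation. The maximum press depth (`MAX_DEPTH_MM`), the function names, and the choice to use the deepest of the sensor readings are all assumptions made for the example. Only the 22 layers and the 12 sensors come from the project itself.

```python
NUM_LAYERS = 22        # number of rendered section layers in the project
MAX_DEPTH_MM = 200.0   # ASSUMED maximum press depth; the real range is not documented


def layer_for_depth(depth_mm, num_layers=NUM_LAYERS, max_depth_mm=MAX_DEPTH_MM):
    """Map a press depth to a layer index in [0, num_layers - 1].

    Layer 0 is the surface view; higher indices are deeper sections.
    """
    # Clamp noisy or out-of-range readings to the valid interval.
    clamped = min(max(depth_mm, 0.0), max_depth_mm)
    # Split the depth range into equal-width bins, one per layer.
    index = int(clamped / max_depth_mm * num_layers)
    # A reading exactly at max depth would land one past the end.
    return min(index, num_layers - 1)


def deepest_press(readings_mm):
    """Return the deepest reading among the sensors (hypothetical strategy)."""
    return max(readings_mm, default=0.0)


if __name__ == "__main__":
    # Twelve hypothetical sensor readings in millimetres.
    readings = [0, 0, 12, 95, 40, 0, 0, 0, 3, 0, 0, 0]
    print(layer_for_depth(deepest_press(readings)))
```

Clamping before binning keeps a noisy or out-of-range reading from indexing past the first or last image. Equal-width bins are the simplest mapping, and a non-linear one could be swapped in if the fabric resists more as it stretches.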

Demo Video

Exhibitions

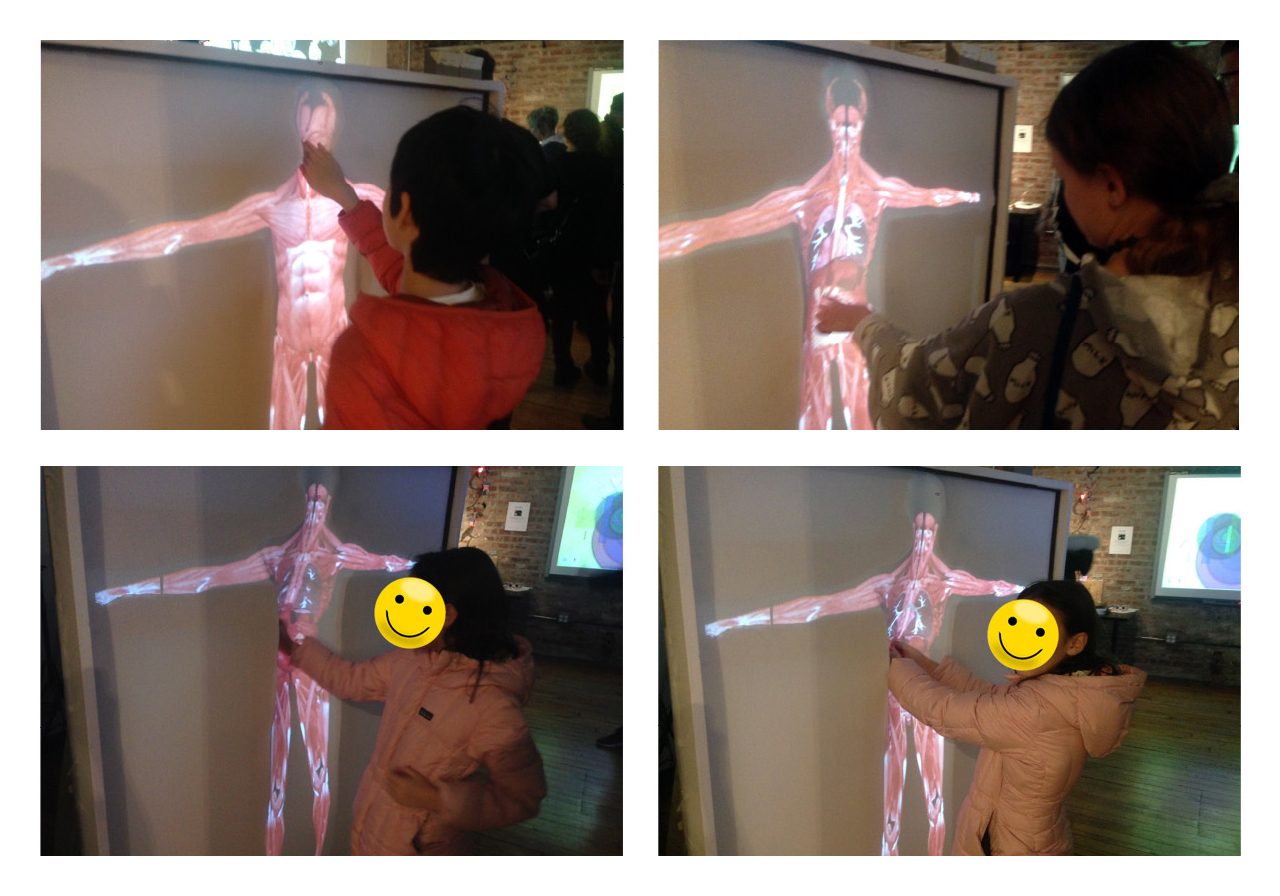

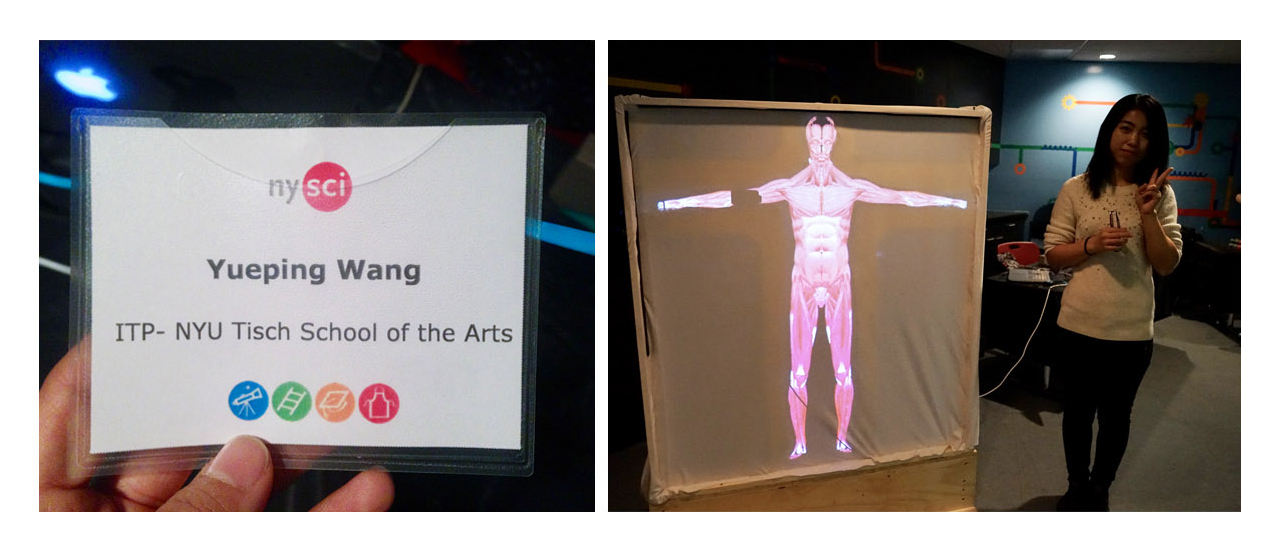

Demo at NYU ITP - Winter Show

At the New York Hall of Science

After its debut at the ITP Winter Show 2016, Atomu received enthusiastic feedback from visitors. It was also selected for exhibition at the New York Hall of Science (NYSCI) during its STEM Night event.

Hundreds of high school students came to NYSCI during the exhibit, and I had the chance to teach them about the technology I used to build this installation.

Design Process

Process Anatomic Models

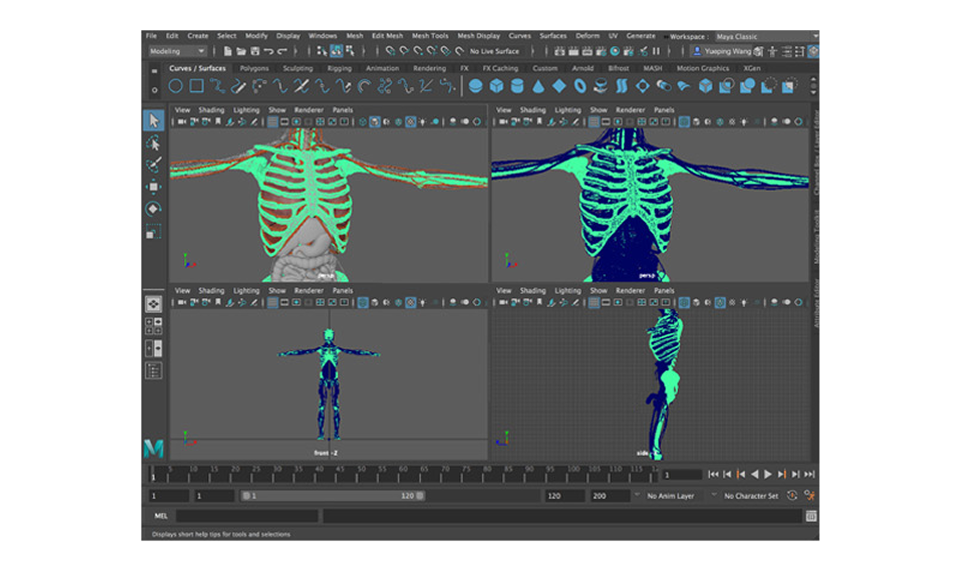

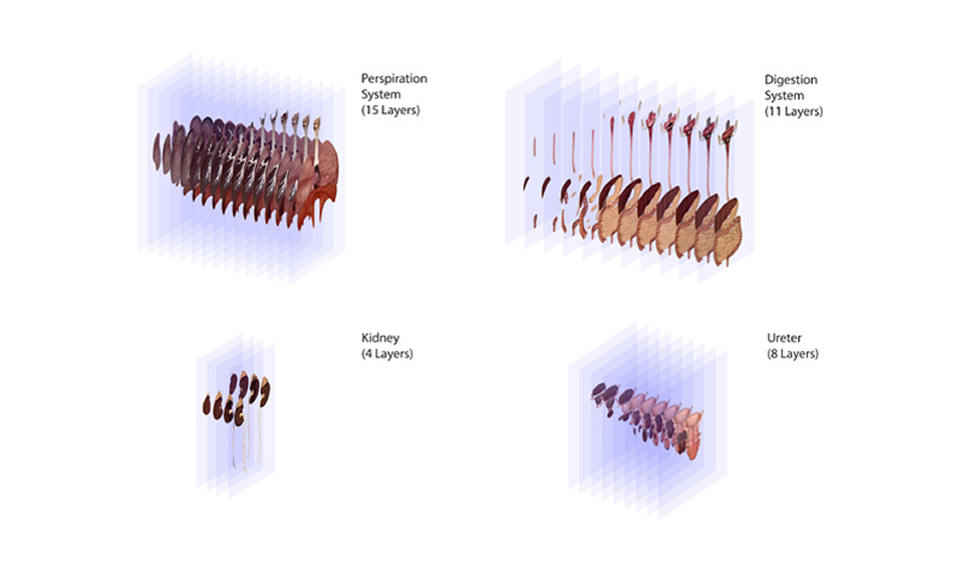

To create an image database for the interface, 3D models of human organs are first sliced and rendered into 22 discrete layers in Maya.

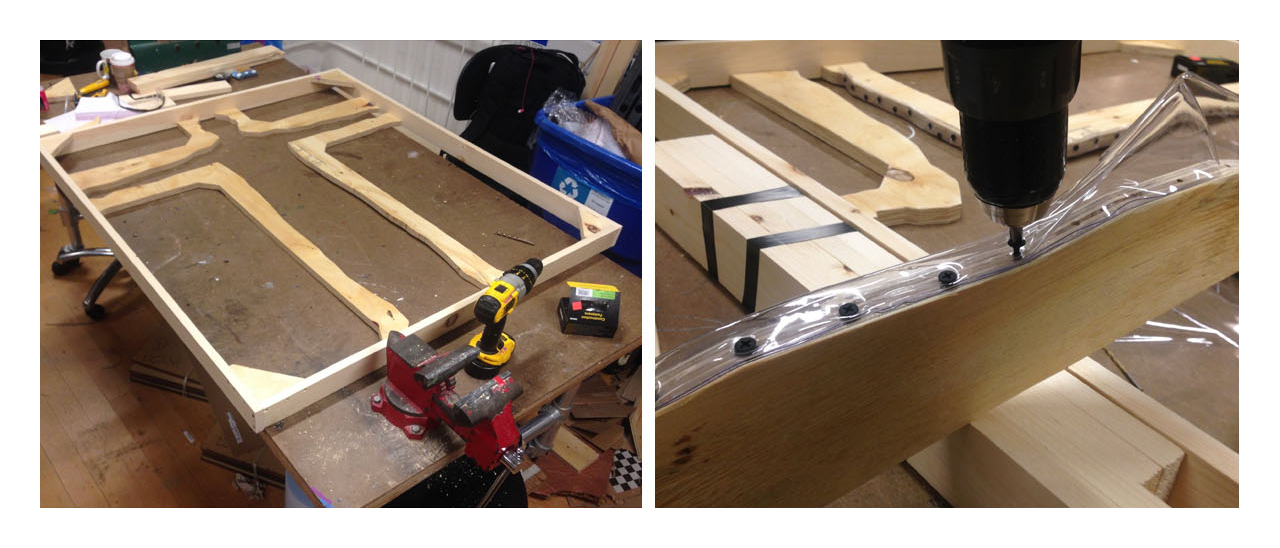

Digital Fabrication with CNC

Using computer-aided fabrication tools, I constructed an almost human-sized rigid wooden frame to support the deformable tactile interface.